Typical operations run on the GPU: projections, interpolations, texture mapping. Mathematically speaking: matrix/vector products and other algebraic operations.

Shaders have to repeat these operations for each vertex in the scene or for each pixel in the image ⇒ the hardware is optimized to run these operations on many elements (vertices/pixels) at the same time.

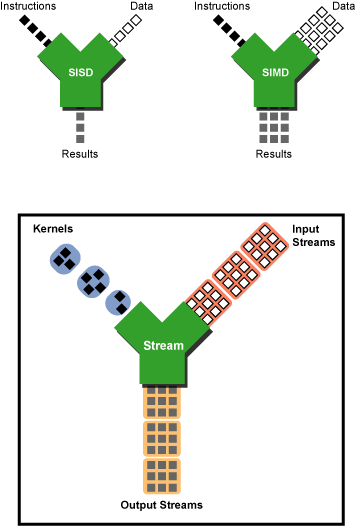

Concept: stream processing. A series of operations (the kernel) acts on each element of a data set (the stream). Example: a loop that operates on each element of a vector:

```c
float stuff[num_els];

for (unsigned int i = 0; i < num_els; ++i) {
    /* do something on stuff[i] */
}
```

The kernel is the body of the loop; the stream is the data vector `stuff[]`.

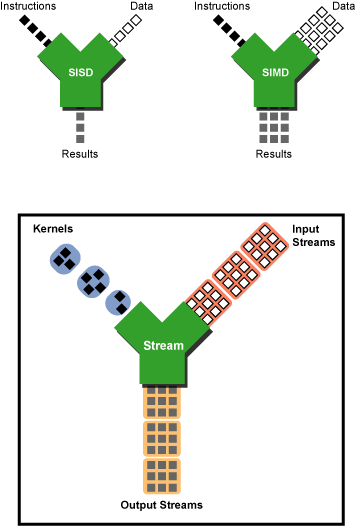

If the same set of operations can act independently on each element, we have uniform streaming: the Single Instruction Multiple Data (SIMD) approach. Parallel computing hardware is optimized for this.

Most 3D graphics operations are uniform stream processing, and GPU hardware is optimized for them ⇒ GPUs are high-performance parallel processing units.

What if we could use this principle to offload more computations to the GPU, especially when the GPU is not being used for intensive rendering?

General-purpose Programming on the GPU (GPGPU): use of the GPU as a (math) coprocessor.

How does it work?

Math problem ⇒ find an equivalent rendering problem ⇒ code the 3D scene with appropriate vertex and fragment shaders ⇒ render the scene ⇒ read back the image ⇒ interpret it as the result.

Very limited:

Yet quite effective: it was possible to simulate simple particle systems with 1,000,000 particles at 20 fps (2004).

Libraries developed to assist in scientific programming using OpenGL and Direct3D: BrookGPU (Stanford U.), Sh (U. of Waterloo).

Reference: GPGPU website

End of 2006/beginning of 2007: ATI and NVIDIA release new hardware and software architectures with native support for GPGPU.

ATI CTM (Close To Metal)/Stream:

NVIDIA CUDA (Compute Unified Device Architecture):

Similar hardware principles.

Similar software principles. Example for a vector sum:

- allocate memory on the GPU for vector1, vector2
- copy vector1, vector2 to the GPU
- compute the sums (vector1[i] + vector2[i]) in parallel

Hardware differences (NV G200, ATI R700):

NVIDIA: each multiprocessor decodes one instruction, which goes 4 times over the 8 ALUs for different data ⇒ 32 threads per warp.

ATI: each multiprocessor decodes one 5-way VLIW instruction, which goes 4 times over the 80 ALUs (16 units of 5 ALUs) for different data ⇒ 64 threads per wavefront.
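The thread counts follow from simple arithmetic, reading the R700's 80 ALUs per multiprocessor as 16 lanes that each execute one 5-way VLIW instruction for one thread (our interpretation of the figures above):

$$
\text{NVIDIA G200:}\quad 8\ \text{ALUs} \times 4\ \text{passes} = 32\ \text{threads per warp}
$$

$$
\text{ATI R700:}\quad \frac{80\ \text{ALUs}}{5\ \text{ALUs per lane}} = 16\ \text{lanes}, \qquad 16\ \text{lanes} \times 4\ \text{passes} = 64\ \text{threads per wavefront}
$$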

NVIDIA: very fine thread parallelization granularity, complex scheduler, simpler compiler.

ATI: coarser thread granularity, very simple scheduler, complex compiler.

NVIDIA: a large share of the GPU die is dedicated to fixed functions (simpler 3D rendering components, not used for GPGPU).

ATI: most of the GPU die is dedicated to shaders (the parts used for GPGPU).

ATI hardware is technically superior (1200 GFLOPS vs 600 GFLOPS), however:

took NVIDIA CUDA into the lead (i.e., NVIDIA has better software and documentation; ATI has better hardware, but the better hardware is much more difficult to exploit).

Standardization of stream programming resulted in the OpenCL (Open Computing Language) specification. Interfaces are expected to converge to OpenCL, although differences in the higher-level runtime interfaces will probably remain.

OpenCL is very similar to low-level CUDA programming, but CUDA also offers an easy high-level interface, and we will start by learning that.

Many of the peculiarities (with their upsides and downsides) of GPUs as computing platforms are tightly related to their origin as sophisticated renderers of animated 3D scenes. We enumerate them here and will discuss them in more detail during the course of the project.

Memory latency: multiple warps per multiprocessor (MP) ⇒ the memory access latency of some warps can be covered by computation on other warps … if there are lots of computations and few memory accesses!

Aligned and coalesced memory requests reduce the number of memory accesses and improve latency.

Aligned: to 32, 64 or 128 bytes (reading a float4 is faster than reading a float3, so wasting one 32-bit word might be more efficient)

Coalesced: sequential, contiguous, aligned

For shared memory: access is as fast as register access if no bank conflicts happen. Bank conflicts happen if different threads access different words in the same bank; if all threads access the same word, it is broadcast and no conflict occurs.

Write access: threads should write to different areas. If two threads write to the same location, at least one write is guaranteed to succeed (so the datum will be properly updated, but we don't know by whom).

More recent cards: atomic write operations (increment, decrement, add, compare, etc.). Access is slow! (e.g., just counting the number of interactions in a particle system slows down the simulation by about 10-20%)

Optimal GPU performance is achieved by:

Computationally dense kernels: kernels that have a high ratio of computations to memory accesses. GPUs are high-performance parallel computing platforms, not memory transfer platforms.
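The computation-to-memory ratio can be quantified as arithmetic intensity $I$, the floating-point operations performed per byte moved to or from memory. For a single-precision vector sum $c_i = a_i + b_i$, each element costs one addition but two 4-byte reads and one 4-byte write. Assuming a memory bandwidth $B$ of roughly 140 GB/s (an assumed figure, of the order of a G200-class card), bandwidth alone caps the kernel far below a 600 GFLOPS arithmetic peak:

$$
I_{\text{vector sum}} = \frac{1\ \text{flop}}{3 \times 4\ \text{bytes}} = \frac{1}{12}\ \text{flop/byte}
$$

$$
\text{attainable rate} \le I \cdot B \approx \frac{1}{12} \times 140\ \text{GB/s} \approx 11.7\ \text{GFLOPS} \ll 600\ \text{GFLOPS}
$$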

Optimal memory access patterns: coalesced, no bank conflict. Design your data structures appropriately.

GPU saturation: keep the ALUs busy! Otherwise performance will not scale with the hardware (e.g., a particle system with fewer than 5000 particles will run just the same on a G80 (first generation NVIDIA) and on a G200 (third generation NVIDIA), because the G200 is not saturated).

Modern GPUs are high-performance parallel computing devices.

OpenCL and its precursors (CTM/Stream and CUDA) allow their use as high-end math coprocessors with relative ease of development.

Widespread ‘hardcore’ gaming keeps the price per gigaflop of these GPUs quite low ⇒ HPC for scientific applications is cheap because of this!

However, gaming is still the main (commercial) reason behind the existence and development of GPUs: we cannot expect improvements to the platforms whose only benefit would be for scientific (or other forms of generic) computing.

Example: