The fundamental concepts of GPGPU are independent of the specific hardware (and thus common to CUDA and OpenCL), aside from semantic differences.

OpenCL system architecture: a host with one or more connected devices. Each device has its own memory and its own compute units.

runs the main program, which controls the devices with appropriate commands;

where the main computations are executed; examples: GPU, DSP;

device components that actually run the computations;

example: multiprocessors of a GPU;

functions (sequences of instructions) to be executed on a device; multiple instances of a kernel may be launched concurrently;

a work-item executes a single kernel instance;

the collection of all work-items issued in one invokation of a kernel; may be 1D, 2D or 3D for ease of indexing;

group of work-items; each work-group executes on a compute unit; work-items in a work-group can cooperate (synchronize and exchange information).

simple form of (embarrassing) data-based parallelism, with reduced (or no) explicit management of the compute units.

Requirements:

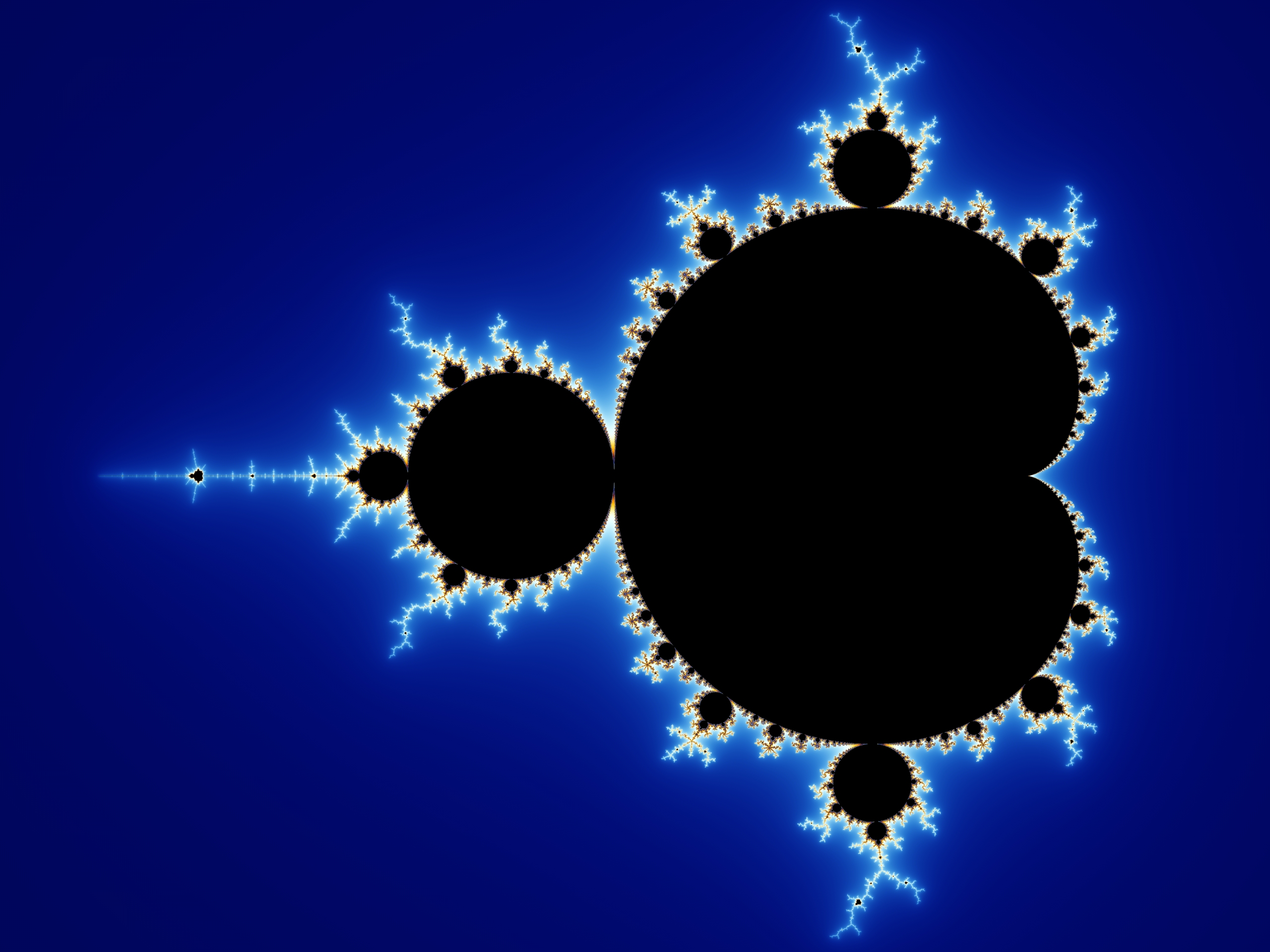

Locus of complex points such that the sequence or does not diverge. (Diverging points are often colored by escape velocity.)

int nrows, ncols, maxiter;

float xmin, xmax, ymin, ymax;

int *bitmap;

for (int row = 0; row < nrows; ++row) {

for (int col = 0; col < ncols; ++col) {

complex z, c;

int iter;

c.real = xmin + col*(xmax - xmin)/ncols;

c.img = ymin + row*(ymax - ymin)/nrows;

z = c;

for (iter = 0; iter < maxiter; ++iter) {

z = add(mul(z, z), c); /* z_{n+1} = z_n^2 + c */

if (norm(z) > 2)

break;

}

bitmap[row*ncols + col] = !!(iter < maxiter);

}

}What’s the heart of the algorithm?

complex z, c;

int iter;

c.real = xmin + col*(xmax - xmin)/ncols;

c.img = ymin + row*(ymax - ymin)/nrows;

z = c;

for (iter = 0; iter < maxiter; ++iter) {

z = add(mul(z, z), c); /* z_{n+1} = z_n^2 + c */

if (norm(z) > 2)

break;

}

bitmap[row*ncols + col] = !!(iter < maxiter);This would be the body of the mandelbrot() kernel.

Compute kernels are the ‘heart’ of the algorithm, to be applied independently to each element of the input data streams (e.g. in Mandelbrot, to each position), producing independent elements of the output data streams (e.g. in Mandelbrot, the resulting pixels).

run kernel mandelbrot on a 2D launch grid with nrows×ncols work-items;

issue as many work-items as possible at a time to process the data set.

No (or very little) manual (programmer) management of the creation and destruction of the work-items (or the hardware threads they belong to).

How does each work-item know which one it is (e.g. which row, col)?

hardware- (or driver-) provided counters.

language-specific function or variable (e.g. get_global_id() in OpenCL)

A device has different kinds of memory:

main device memory; accessible by all work-items in a kernel; persistent across kernel launches;

read-only on device, written by host; persistent across kernel launches;

global memory accessed via the GPU texture engines; offers multi-dimensional indexing, coordinate normalization, data normalization, spatial caching, (bi)linear interpolation;

reserved work-group memory; accessible by all work-items in a work-group; volatile (scope: kernel launch);

private to each work-item; volatile; held in the registers of the compute unit, unless too much is being used, in which case it spills into global memory (register spills are what CUDA calls local memory) and performance suffers.

The typical GPGPU program follows this structure:

the device(s) to use (CUDA runtime can do this behind the scenes, in OpenCL this must be done manually);

the initial data for the problem to the device(s);

one or more kernel(s) on the device(s);

the final results from the device(s).

Consider a modern, middle- or high-range setup.

Host memory bandwidth: around 50 GB/s.

Device memory bandwidth: 300–500 GB/s.

What about data transfers between the host and the device?

PCI-E 3.0 x16: less than 16GB/s in the ideal case:

Even the upcoming NVLink (64GB/s theoretical peak) has about an order of magnitude less than device VRAM bandwidth.

A GPGPU program has two parts:

runs on the CPU (starting from main()), manages the resources, handles I/O, tells the device(s) to run kernels;

collection of kernels that are uploaded to the device(s), issued by the host code.

the same source code holds both the host and the device code; requires a specific compiler to produce an executable that includes both the host and device part of the program;

host code sources are separate from device code sources; host code is produced by (any) host compiler (gcc, clang, msvc, icc, etc), device code is produced by a distinct library, offline (before program execution) or at run-time.

Single source

Separate source

GPUs are designed for stream computing

SPMD/SIMT hardware: wide compute cores with limited flow control capabilities;

high bandwidth, high latency global memory: can serve data at high speed with the right access patterns;

asynchronous host/device communication (concurrent execution on device and host, dedicated copy engines, etc).

you use the SIMD units by writing the equivalent of

float4 a, b, c;

a = /* compute or load vector */;

b = /* compute or load vector */;

c = a + b;Limitations: must be customized for specific hardware to exploit the full SIMD width (e.g. to add 32 pairs of floats, need to use 8 float4 adds with SSE, 4 float8 adds with AVX, 2 float16 with AVX2).

you write what a single lane does:

float a, b, c;

a = /* compute or load scalar */;

b = /* compute or load scalar */;

c = a + b;and ask the GPU to run this as many times as needed. The hardware takes care of distributing the load over its compute units as needed.

A GPU multiprocessor (MP) has multiple processing elements (PE), but they do not act independently from each other (similar to SIMD lanes, but with hardware-managed scheduling). Hardware and semantic details depend on architecture.

Limited flow control: optimized for predication, lane masking, no irreducible control flow (spaghetti code), no or limited recursion.

GPUs run groups of work-item in lock-step. The group (warp in NVIDIA-speak, wavefront in AMD-speak) has a fixed size which is the SIMT width of the hardware. Even if you launch a single work-item, a full warp/wavefront will run!

Very different architecture, an Ultra-Threaded Dispatch Processor (UTDP) schedules wavefronts across all compute units.

16×5 PE/MP, 4 cycles per wavefront

16×4 PE/MP, 4 cycles per wavefront

4×16 PE/MP, 4 cycles per wavefront, 4 wavefronts at a time

GPU memory controllers: high bandwidth, high latency.

a transaction might take 100s of cycles to complete.

a single transaction can serve a whole subgroup (warp/

a “pixel”- or “vertex”-worth of data per work-item, for contiguous groups of “pixels”. How does this translate for compute?

Preferred data types: 32-bit, 64-bit or 128-bit data types, i.e. int, float, or vector type such as

and

Try to keep it aligned and contiguous.

Supported data types have alingment constraints, typically the type size.

float (size: 4 bytes) is read with a single transaction if its first byte is at an address which is a multiple of 4 (“4-byte boundary”)float2 is 2×float, but single transaction only if at 8-byte boundary.float4 is 4×float, but single transaction only if at 16-byte boundary.float3? (hint: ‘it depends’)subgroup (warp/wavefront) is served optimally for contiguous data aligned on multiple-of-data-alignment boundaries (i.e. first datum must be on a boundary which is a multiple of the number of values that the hardware can provide in a single transaction).

Consider for example a class of old GPU without global memory cache,

half-warp granularity for transactions (16 work-items). A single transaction loads:

float)float2)Two transactions load;

float4)Other architectures may have (more or less effective) global memory cache, which may help hide inefficient layout choices. But beware of relying on this too much.

float4 to float3 even if fourth component is not needed;prefer structure of arrays (SoA) over arrays of structures (AoS);

sometimes, bypassing the cache and using a good layout is better than exploiting the cache!

(Note: do use the cache(s) as much as possible, but lay out your data optimally regardless.)

Set up a particle system.

Each particle has the properties:

How do you proceed?

typedef struct particle {

float3 pos;

float3 vel;

} particle_t;

kernel void

iteration(global particle_t *particles)

{

int workitem = get_global_id(0);

float3 pos = particles[workitem].pos;

float3 vel = particles[workitem].vel;

/* do stuff */

}Results in inefficient, repeated loads.

Worst-case: 4 transactions per work-item.

typedef struct particle {

float3 pos;

float3 vel;

} particle_t;

kernel void

iteration(global particle_t *particles)

{

int workitem = get_global_id(0);

particle_t pdata = particles[workitem];

/* do stuff */

}May improve over the previous one, depending on presence/efficiency of GPU caches. The whole struct can be loaded as three float2 loads, if the compiler is smart enough. (Note that it cannot be loaded as a float4+float2 due to alignment constraints.)

Worst-case: 3 transactions per work-item.

typedef struct particle {

float4 pos; /* ignore 4th component */

float4 vel; /* ignore 4th component */

} particle_t;

kernel void

iteration(global particle_t *particles)

{

int workitem = get_global_id(0);

particle_t pdata = particles[workitem];

/* do stuff */

}As previous, but compiler knows that it can load each element of the struct as a float4. Better if you only need to load pos or vel. Cache usage might be worse.

Worst-case: 2 transactions per work-item.

kernel void

iteration(

global float4 * restrict posArray,

global float4 * restrict velArray)

{

int workitem = get_global_id(0);

float4 pos = posArray[workitem]; /* ignore 4th component */

float4 vel = velArray[workitem]; /* ignore 4th component */

/* do stuff */

}All work-items in a subgroup load pos in a single transaction and vel in another (single) transaction: two transactions load all the data for the whole warp/

you can store extra info in the 4th component, e.g. mass and density, if you need additional properties.

implicit or explicit list of commands that the host issues on a device; each device is controlled by one (or more) command queues.

Commands are executed asynchronously: the host queues the command, the device runs them as soon as possible, the host can do other stuff in the mean time.

markers associated with commands to allow the host to synchronize with the devices.

The host can wait on events, query even status, or wait until the device has completed all commands.

nvVector.h in the CUDA samples);